Growing up in North London, Demis Hassabis had a passion for games. His experience as a member of England’s junior chess teams shaped his initial ambition to become a world champion. That didn’t work out, but his efforts to improve at the game led him to ponder his own thought processes, which sparked a deeper fascination with the brain.

“As I grew older, acquiring my first computer and learning programming, I realised the incredible potential of machines as tools for the mind, capable of performing tasks even while we sleep,” he recalls. “This realisation steered me towards AI, with the aim of making machines intelligent.”

During the 1990s, Hassabis was drawn to the gaming industry, where he helped create AI for commercially successful games. Then the 2000s brought significant advancements in neuroscience, including fMRI and other groundbreaking technologies, compelling him to pursue a PhD. This journey of gathering ideas and insights ultimately led to the founding of DeepMind in 2010.

“My career trajectory makes sense when considering my long-standing ambition to create something akin to DeepMind, which aims at artificial general intelligence (AGI),” he says. “When we launched nearly 15 years ago, the optimal methods for achieving AGI were unclear. We placed our bets on two foundational principles: 1) the capability of systems to learn autonomously, diverging from traditional AI systems that were hardcoded with logic-based solutions, and 2) the principle of generality, meaning the ability of a single system to learn and apply knowledge across a broad range of tasks. These principles, we believed, were essential markers of true intelligence, a belief that has been validated over time as these systems have scaled impressively.”

The road to AGI

Inspiration from neuroscience played a significant role in shaping these early ideas, influencing the development of neural networks and reinforcement learning. Acquired by Google in 2014 and now known as Google DeepMind, the company has entered a new phase in which innovative practices and engineering at scale have become the primary drivers of further progress.

“AGI, by our definition, is a system capable of performing almost any cognitive task that humans can,” Hassabis explains. “This definition hinges on the human brain as the sole proven example of general intelligence. By emulating the wide range of cognitive abilities observed in humans, we aim to achieve a system that qualifies as generally intelligent.”

The emergence of AGI is a subject of intense debate, including whether its arrival would be immediately apparent or would require rigorous testing to verify.

Hassabis believes that by evaluating systems across thousands of tasks that humans perform, and checking that they meet a certain performance threshold, we can determine more confidently whether and when AGI has been achieved. The broader and more comprehensive the test set, the more certain we can be that it covers the general space of human cognitive capabilities.

“The advancement towards AGI appears to be a gradual process rather than an abrupt leap,” he says. “Current systems are evolving rapidly, gaining power incrementally as we enhance our techniques, computing power, and data handling. There’s anticipation for significant innovations in areas like planning, memory, and tool use – capabilities currently absent in today’s systems – which could mark substantial progress. These developments are what we envisioned back in 2010, recognising that AI technologies would be profoundly beneficial for daily life and scientific research even before achieving AGI. Indeed, AI systems have become integral to our daily interactions, suggesting we are only beginning to uncover the potential applications and benefits that will emerge in the years leading up to AGI.”

From AlphaGo to AlphaFold

At the time of DeepMind’s founding, the potential of learning systems, particularly deep learning (then a recent academic development), had not yet been recognised within the industry. The company initially focused on games as a proving ground for its AI technologies, because they offered a versatile and rapid testing environment for algorithmic ideas. A major target was Go, a game that conventional AI approaches had not yet mastered (unlike chess, which IBM’s Deep Blue had conquered in the 1990s).

“Go’s complexity and reliance on patterns and intuition presented a unique challenge,” Hassabis says. “Our success with AlphaGo in 2015, achieving world champion status, marked a significant milestone, demonstrating the power of our learning algorithms and igniting a broader interest in the potential of these methods. This breakthrough was unexpected by many, who predicted it was a decade away, [and] underscored the versatility of our approach. This laid the groundwork for applications beyond gaming, in science and language processing.”

The next breakthrough came with proteins, which are involved in every bodily function and are therefore fundamental to life. Each protein starts as a sequence of amino acids, which then folds into a 3D structure that determines the protein’s function. The protein folding problem, a grand challenge in biology for 50 years, asks whether we can predict a protein’s 3D structure from its sequence. Solving it would greatly aid drug discovery, our understanding of disease, and our comprehension of life itself.

“After years of effort, starting in 2016 and through to 2020, we achieved predicting protein structures from sequences in seconds, a task that traditionally could take years per protein,” Hassabis says. “With over 200 million known proteins, manually solving each one would have taken a billion years. We accomplished this in about a year with our technology.”

In the three years since AlphaFold was launched, it’s been used by more than a million researchers and biologists globally. Its success in advancing fundamental science led to the creation of a new company, Isomorphic Labs, which aims to leverage AI for drug discovery. Understanding a protein’s structure enables targeted drug design, which can minimise side effects by helping to ensure that compounds bind specifically to their intended protein targets.

“Isomorphic Labs is now applying AI to create chemical compounds for precise drug targeting,” Hassabis says. “Recent collaborations with Eli Lilly and Novartis highlight the potential to expedite drug discovery processes, reducing the typically decade-long journey to potentially just months, with the goal of addressing critical diseases more efficiently.”

Dissecting and analysing technology

Where to from here? The company is already expanding its focus into several crucial areas, including materials science, where discovering new materials, such as a potential room-temperature superconductor, is a significant goal. This ambition highlights the untapped possibilities within chemical space that the company aims to explore using AI.

“Over the next few years, I anticipate human experts will increasingly use powerful AI tools to enhance their knowledge,” Hassabis says. “Currently, it remains the responsibility of these experts to formulate hypotheses and identify the issues they want these systems to address, as well as to interpret the insights generated. However, looking a decade or more ahead, there’s the potential for these systems to begin generating their own conjectures. By integrating such systems with advanced language models, we might reach a point where we can request explanations for their processes, akin to consulting a leading scientist.”

Hassabis believes that as we begin to grasp the complexity of AI systems, we are also recognising the need for extensive research to dissect and analyse these technologies, rather than merely enhancing their performance. This involves studying how AI systems construct knowledge representations, learning how to predict and control their behaviour, and establishing safeguards for their operation. The endeavour may well require a new branch of science, because AI is, in essence, an engineering science.

“Unlike natural sciences where phenomena are observed in the world, in AI the artifact must first be constructed before it can be studied scientifically,” he says. “Only in recent years have we developed systems sophisticated and useful enough to merit such detailed investigation, much like the scrutiny applied in natural sciences. Given the complexity of these engineered systems, understanding them is as challenging as understanding natural systems explored in fields like physics, biology, and chemistry.”

First, do no harm

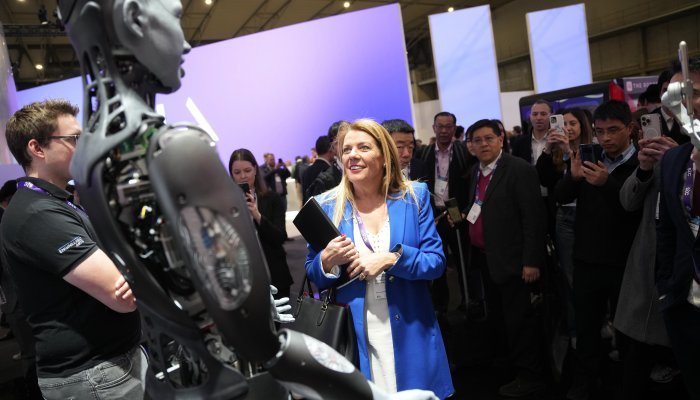

This is an exciting period of accelerated progress in AI, driven both by groundbreaking research and by the innovative application of existing technologies at scale. One area of concern, however, is misuse by bad actors. Google DeepMind recently formed a new organisation, AI Safety and Alignment, to address this and other risks.

“Open sourcing, a practice we strongly support, presents its own set of challenges in this context,” Hassabis says. “While it democratises access to AI technologies, ensuring their responsible use downstream becomes increasingly complex. We’ve released open-source versions of our systems to support the developer and enterprise community. However, as these systems advance, society must grapple with the question of how to prevent their exploitation by individuals or states with harmful intent.”

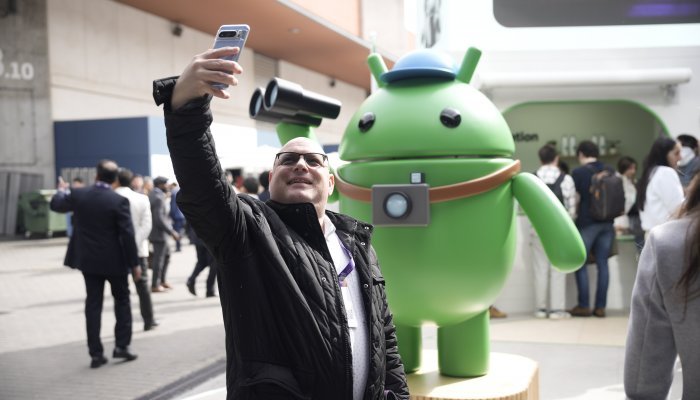

Hassabis sees the integration of AI into mobile technology as a significant opportunity for innovation. He believes the potential for next-generation digital assistants that are truly useful in daily life is enormous, moving beyond the gimmicky nature of earlier iterations. This evolution raises important questions about which type of device is best suited to such interactions.

“Looking forward five years or more, we may find that smartphones aren’t ideal for maximising the benefits of AI in our daily lives,” he says. “Alternatives such as smart glasses or other wearable technologies could offer more contextually aware and immersive experiences by allowing AI systems to better understand the user’s environment. This prospect suggests that we are on the cusp of discovering and inventing new ways to integrate AI seamlessly into our lives, potentially transforming how we interact with technology and each other.”

Demis Hassabis is the co-founder and CEO of Google DeepMind. He has won many prestigious international awards for his research work, including the Breakthrough Prize in Life Sciences, the Canada Gairdner Award, and the Lasker Award. His work has been cited more than 100 000 times and he is a Fellow of the Royal Society and the Royal Academy of Engineering. www.deepmind.google.